ASR System: KALDI

Automatic Speech Recognition System using KALDI from scratch

Hello Researchers! In this post, we will learn how to build an automatic speech recognition (ASR) system with KALDI.

Kaldi is an open-source toolkit for speech recognition written in C++ and licensed under the Apache License v2.0. You can use it to train speech recognition models and to decode speech in audio files.

Download and Install KALDI

Skip this step if you have already set up KALDI.

```bash
git clone https://github.com/kaldi-asr/kaldi
```

Now go to the kaldi directory, open the INSTALL file, and compile the KALDI framework according to the instructions given in that file. The build takes a while, so use that time to enjoy some dark chocolate coffee. (Did you know? According to legend, Kaldi was an Ethiopian goatherd who discovered the coffee plant around 850 AD.)
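As a quick reference while you read the INSTALL files, the sketch below collects the build sequence that tools/INSTALL and src/INSTALL describe in recent Kaldi checkouts. Treat it as a summary under that assumption, not a replacement: options change between versions, so follow the files in your own copy. The build_kaldi function and its DRY_RUN switch are hypothetical helpers added for illustration. The IRSTLM step is included because the language-model script later in this post calls tools/irstlm/bin/build-lm.sh, which is installed separately through tools/extras/install_irstlm.sh.

```bash
#!/usr/bin/env bash
# Sketch of the usual Kaldi build sequence (see tools/INSTALL and src/INSTALL).
# build_kaldi and DRY_RUN are hypothetical helpers: with DRY_RUN=1 the
# commands are only printed, not executed.
build_kaldi() (
  set -e                             # stop at the first failing step
  root="$1"                          # path to your kaldi checkout
  jobs="${2:-4}"                     # number of parallel make jobs
  run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

  run cd "$root/tools"
  run extras/check_dependencies.sh   # reports missing system packages
  run make -j "$jobs"                # builds OpenFst and other third-party tools
  run extras/install_irstlm.sh       # IRSTLM, used later to build the n-gram LM
  run cd "$root/src"
  run ./configure --shared
  run make depend -j "$jobs"
  run make -j "$jobs"
)
```

Run `DRY_RUN=1 build_kaldi ~/kaldi` first to review the commands, then `build_kaldi ~/kaldi 8` to build with 8 parallel jobs. The function body runs in a subshell, so the `cd` calls do not change your terminal's working directory.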

Let’s Talk about Speech Recognition

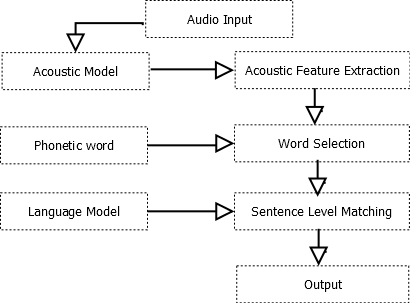

A general speech recognition framework works as follows:

1. The incoming wav speech is processed.

2. Acoustic features (for example MFCCs) are extracted from the wave signal, and an acoustic model maps those features to phonetic units.

3. A lexicon (pronunciation dictionary) links those phonetic units to the words in the vocabulary.

4. A language model or grammar defines how words can be connected to each other.

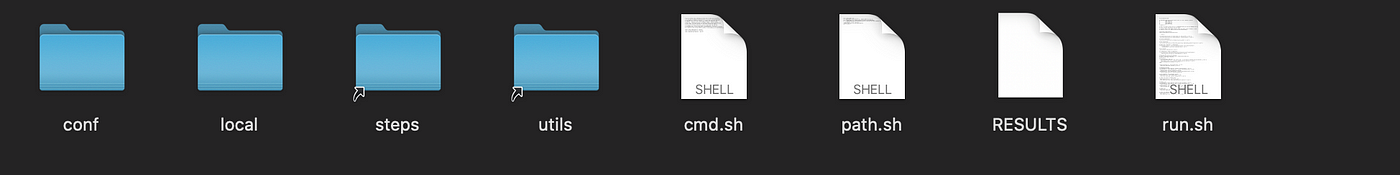

Let’s understand the folder structure

The “egs” folder contains example recipes and scripts for Kaldi. Make a copy of any example folder and rename it (this post uses egs/en). Your folder structure will contain the following:

conf: holds configuration files, such as the MFCC settings used during feature extraction.

local, steps, and utils: contain the scripts needed to prepare the data and the language model, plus the supporting scripts for training and decoding the ASR system.
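Most Kaldi recipes share this layout: conf/ and local/ hold recipe-specific files, steps/ and utils/ are usually symbolic links to egs/wsj/s5/steps and egs/wsj/s5/utils, and cmd.sh and path.sh set up job execution and the PATH (the language-model script in this post sources both). As a minimal sketch, assuming you start from an empty folder and pass an absolute path to your Kaldi checkout, the hypothetical setup_recipe function below creates that layout. The cmd.sh contents assume every job runs on your own machine; path.sh follows the pattern of the standard recipes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create egs/<name> inside a Kaldi checkout with the
# usual recipe layout. steps/ and utils/ are linked from egs/wsj/s5.
setup_recipe() {
  local kaldi_root="$1" name="$2"
  local dir="$kaldi_root/egs/$name"

  mkdir -p "$dir/conf" "$dir/local" "$dir/data/train" "$dir/data/test"

  # Shared scripts: link rather than copy, so they stay in sync with Kaldi.
  ln -sfn "$kaldi_root/egs/wsj/s5/steps" "$dir/steps"
  ln -sfn "$kaldi_root/egs/wsj/s5/utils" "$dir/utils"

  # cmd.sh: run every job on this machine (no cluster queue).
  cat > "$dir/cmd.sh" <<'EOF'
export train_cmd="run.pl"
export decode_cmd="run.pl"
EOF

  # path.sh: put Kaldi binaries, OpenFst and utils/ on the PATH.
  cat > "$dir/path.sh" <<EOF
export KALDI_ROOT=$kaldi_root
[ -f \$KALDI_ROOT/tools/env.sh ] && . \$KALDI_ROOT/tools/env.sh
export PATH=\$PWD/utils/:\$KALDI_ROOT/tools/openfst/bin:\$PWD:\$PATH
. \$KALDI_ROOT/tools/config/common_path.sh
export LC_ALL=C
EOF
}
```

For example, `setup_recipe "$HOME/kaldi" en` creates egs/en with empty data/train and data/test folders ready for the next step.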

Data Preparation

The first task is to prepare the data in the KALDI format, which uses the files wav.scp, utt2spk, spk2utt, and text. Create a data folder inside your recipe directory, and inside it create two directories, train and test. Also put your wav audio files in your base folder.

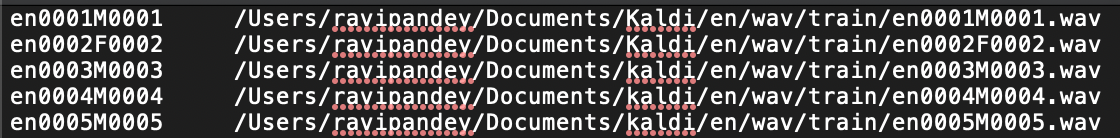

Name your wav files using the convention below. (This is only for convenience; Kaldi does not require it.)

The first 2 letters give the language (for example en for English or sp for Spanish), the next 4 characters give the speaker ID (with 100 training speakers, for example, IDs run from 0001 to 0100), the next character gives the speaker's gender (M or F), and the last 4 characters give the sentence ID for that speaker. Your file names should therefore look like en0001M0001 or en0002F0002.
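The sketch below splits a name that follows this convention into its fields with bash substring expansion. parse_utt_id is a hypothetical helper, and it assumes exactly 11 characters (2 + 4 + 1 + 4). The first seven characters (for example en0001M) act as the speaker ID. Keeping the speaker ID as a prefix of the utterance ID is what Kaldi's data-preparation documentation recommends, because it keeps utt2spk and spk2utt sorted in a consistent order.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split an utterance ID such as en0001M0001 into the
# fields of the naming convention (2 + 4 + 1 + 4 characters).
parse_utt_id() {
  local id="$1"
  if [ "${#id}" -ne 11 ]; then
    echo "unexpected length: $id" >&2
    return 1
  fi
  local lang="${id:0:2}" spk="${id:2:4}" gender="${id:6:1}" sent="${id:7:4}"
  # spk_id (language + speaker + gender) is what goes into utt2spk.
  echo "lang=$lang speaker=$spk gender=$gender sentence=$sent spk_id=${id:0:7}"
}
```

For example, `parse_utt_id en0001M0001` prints `lang=en speaker=0001 gender=M sentence=0001 spk_id=en0001M`.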

Follow these steps to create the KALDI-format data files.

wav.scp: create this file in your train folder. Each line maps an utterance ID to its audio file: <utterance-id> <path-to-wav>.

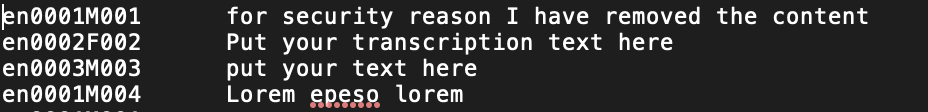

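text: each line contains an utterance ID followed by its transcription: <utterance-id> <word1> <word2> ...

utt2spk: each line maps an utterance ID (the file name without .wav) to its speaker ID: <utterance-id> <speaker-id>.

spk2utt: lists the utterances spoken by each speaker, one speaker per line: <speaker-id> <utterance-id-1> <utterance-id-2> ... You can also generate it from utt2spk with utils/utt2spk_to_spk2utt.pl.

spk: a list of speaker IDs in the <language + speaker ID + gender> pattern, for example en0001M.

utt: a list of the unique utterance IDs. These last two lists are helpers for your own bookkeeping; Kaldi's standard scripts do not require them.

Repeat the same steps for your test folder.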
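Writing these files by hand gets tedious beyond a few recordings. A minimal sketch, assuming the naming convention above (speaker ID = first seven characters) and wav paths without spaces: the hypothetical make_kaldi_data_dir function writes wav.scp, utt2spk, and spk2utt for every .wav file in a folder. The text file still has to come from your transcriptions. Kaldi expects these files to be sorted in the C locale, hence `LC_ALL=C sort`; for spk2utt you can use Kaldi's utils/utt2spk_to_spk2utt.pl instead of the awk step.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write wav.scp, utt2spk and spk2utt for every *.wav in
# wav_dir, assuming names like en0001M0001 (speaker ID = first 7 characters).
# The 'text' file is not generated: it must come from your transcriptions.
make_kaldi_data_dir() {
  local wav_dir="$1" data_dir="$2"
  local abs_dir f
  abs_dir=$(cd "$wav_dir" && pwd)    # wav.scp should hold absolute paths
  mkdir -p "$data_dir"

  # wav.scp: <utterance-id> <path-to-wav>, sorted in C locale
  for f in "$abs_dir"/*.wav; do
    [ -e "$f" ] || continue          # glob matched nothing
    echo "$(basename "$f" .wav) $f"
  done | LC_ALL=C sort > "$data_dir/wav.scp"

  # utt2spk: <utterance-id> <speaker-id>; already sorted because wav.scp is
  awk '{ print $1, substr($1, 1, 7) }' "$data_dir/wav.scp" > "$data_dir/utt2spk"

  # spk2utt: <speaker-id> <utt-1> <utt-2> ..., speakers in first-seen order
  awk '{ if ($2 in utts) utts[$2] = utts[$2] " " $1
         else { utts[$2] = $1; order[++n] = $2 } }
       END { for (i = 1; i <= n; i++) print order[i], utts[order[i]] }' \
    "$data_dir/utt2spk" > "$data_dir/spk2utt"
}
```

For example, if your training recordings sit in a folder called wav_train (a hypothetical name), run `make_kaldi_data_dir wav_train data/train`, then repeat with the test recordings and data/test.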

Language Data Preparation

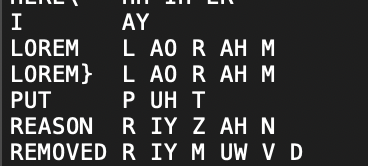

Create a lexicon.txt file in your data/local/dict/ folder. Each line contains a word from your vocabulary followed by its phonetic transcription, as in the example below.

For example, the phonetic transcription of reason can be R IY Z AH N, or R ee Z ahn with a different phone set.
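Two details are easy to miss here. The words in your text files must match the lexicon entries exactly, because Kaldi symbol tables are case-sensitive. And the lexicon must contain the word that the language-model script passes to utils/prepare_lang.sh as its out-of-vocabulary entry ('!SIL' in the script in the next section); otherwise prepare_lang.sh stops with an error. The sketch below writes a tiny illustrative lexicon with that entry and lists transcript words that have no pronunciation yet. write_example_lexicon and find_missing_words are hypothetical helper names, and the entries are examples only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a tiny example lexicon. The entries are
# illustrative; '!SIL sil' matches the OOV word used with prepare_lang.sh.
write_example_lexicon() {
  local dict_dir="$1"
  mkdir -p "$dict_dir"
  cat > "$dict_dir/lexicon.txt" <<'EOF'
!SIL sil
reason R IY Z AH N
EOF
}

# Hypothetical helper: print every word of a Kaldi 'text' file (utterance ID
# in column 1) that has no entry in lexicon.txt, once each, sorted.
find_missing_words() {
  local text="$1" lexicon="$2"
  awk 'NR == FNR { known[$1] = 1; next }
       { for (i = 2; i <= NF; i++) if (!($i in known)) print $i }' \
    "$lexicon" "$text" | LC_ALL=C sort -u
}
```

Running `find_missing_words data/train/text data/local/dict/lexicon.txt` before building the language model shows which words still need a pronunciation.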

The following files are also required in data/local/dict/ for language model preparation.

nonsilence_phones.txt: lists the speech (nonsilence) phones used in your lexicon, one per line, such as aa. Create this file and save it.

optional_silence.txt: type sil and save it to data/local/dict/.

silence_phones.txt: lists phones that carry no linguistic content, such as silence or noise. Type sil and save it. Our next step is to create the language model.
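Every phone used in lexicon.txt (apart from sil) must appear in nonsilence_phones.txt, so deriving the file is safer than typing it. A minimal sketch, assuming sil is the only silence phone as in this post: the hypothetical make_phone_lists function writes all three files into the dict folder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the phone lists from lexicon.txt, assuming
# 'sil' is the only silence phone.
make_phone_lists() {
  local dict_dir="$1"
  echo sil > "$dict_dir/silence_phones.txt"
  echo sil > "$dict_dir/optional_silence.txt"
  # Every phone in columns 2..NF of the lexicon except sil, unique and sorted.
  awk '{ for (i = 2; i <= NF; i++) print $i }' "$dict_dir/lexicon.txt" \
    | grep -vx sil | LC_ALL=C sort -u > "$dict_dir/nonsilence_phones.txt"
}
```

For the one-word lexicon with reason and !SIL, nonsilence_phones.txt ends up with the five lines AH, IY, N, R, and Z.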

Language Model Preparation

Here we use an N-gram language model. Copy the script below into your folder and replace the paths with your own. I set n_gram=2, which builds a bigram language model; change it to suit your requirements.
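To make n_gram=2 concrete, the sketch below computes unsmoothed maximum-likelihood bigram probabilities, P(w2 | w1) = count(w1 w2) / count(w1), from lines wrapped in <s> ... </s> like the lmtrain.txt file the script creates. It is an illustration only: bigram_mle is a hypothetical helper, and IRSTLM's build-lm.sh, which the script actually uses, also applies smoothing so that unseen word pairs get a non-zero probability.

```bash
#!/usr/bin/env bash
# Illustration only (not part of the Kaldi recipe): unsmoothed bigram
# probabilities P(w2 | w1) = count(w1 w2) / count(w1 as a history word).
# Output lines: <w1> <w2> <probability>, sorted.
bigram_mle() {
  LC_ALL=C awk '
    {
      for (i = 1; i < NF; i++) {
        hist[$i]++                   # w1 followed by some word
        pair[$i " " $(i + 1)]++      # the word pair (w1, w2)
      }
    }
    END {
      for (p in pair) {
        split(p, w, " ")
        printf "%s %.3f\n", p, pair[p] / hist[w[1]]
      }
    }' "$1" | LC_ALL=C sort
}
```

With the two lines `<s> a b </s>` and `<s> a c </s>`, the pair `a b` gets probability 0.500, because a is followed by b in one of its two occurrences, while `<s> a` gets 1.000.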

#!/bin/bash

#set-up for single machine or cluster based execution

. ./cmd.sh

#set the paths to binaries and other executables

[ -f path.sh ] && . ./path.sh

kaldi_root_dir='/Users/ravipandey/Documents/kaldi'

basepath='/Users/ravipandey/Documents/kaldi/egs/en'

#Creating input to the LM training

#corpus file contains list of all sentencescat $basepath/data/train/text | awk '{first = $1; $1 = ""; print $0; }' > $basepath/data/train/transwhile read linedoecho "<s> $line </s>" >> $basepath/data/train/lmtrain.txtdone <$basepath/data/train/trans#*******************************************************************************#lm_arpa_path=$basepath/data/local/lmtrain_dict=dict

train_lang=langmodel

train_folder=train

n_gram=2 # This specifies bigram or trigram. for bigram set n_gram=2 for tri_gram set n_gram=3

echo " Creating n-gram LM "

rm -rf $basepath/data/local/$train_dict/lexicon_c.txt $basepath/data/local/$train_lang $basepath/data/local/tmp_$train_lang $basepath/data/$train_lang

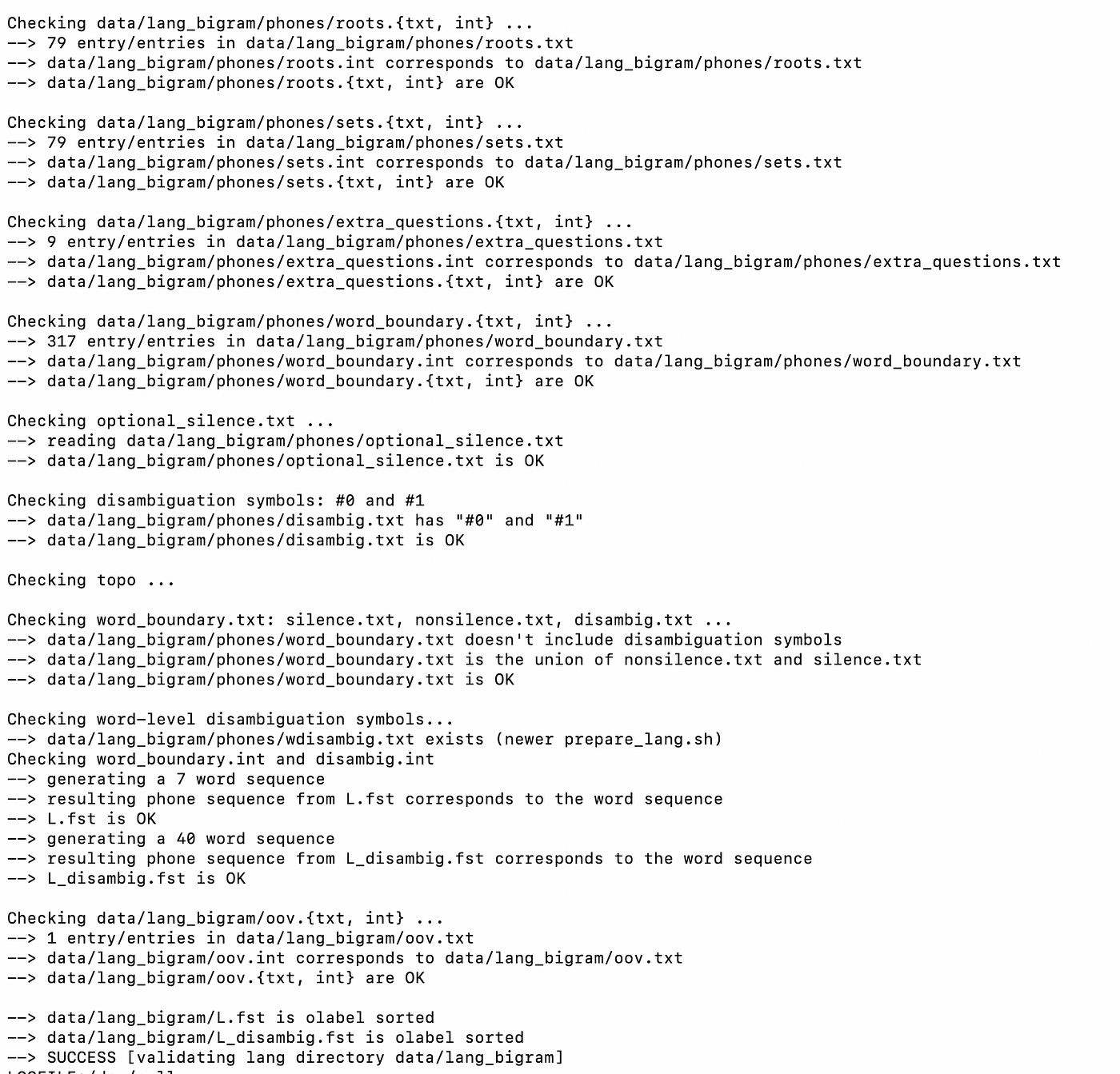

mkdir $basepath/data/local/tmp_$train_langutils/prepare_lang.sh --num-sil-states 3 data/local/$train_dict '!SIL' data/local/$train_lang data/$train_lang$kaldi_root_dir/tools/irstlm/bin/build-lm.sh -i $basepath/data/$train_folder/lmtrain.txt -n $n_gram -o $basepath/data/local/tmp_$train_lang/lm_phone_bg.ilm.gzgunzip -c $basepath/data/local/tmp_$train_lang/lm_phone_bg.ilm.gz | utils/find_arpa_oovs.pl data/$train_lang/words.txt > data/local/tmp_$train_lang/oov.txtgunzip -c $basepath/data/local/tmp_$train_lang/lm_phone_bg.ilm.gz | grep -v '<s> <s>' | grep -v '<s> </s>' | grep -v '</s> </s>' | grep -v 'SIL' | $kaldi_root_dir/src/lmbin/arpa2fst - | fstprint | utils/remove_oovs.pl data/local/tmp_$train_lang/oov.txt | utils/eps2disambig.pl | utils/s2eps.pl | fstcompile --isymbols=data/$train_lang/words.txt --osymbols=data/$train_lang/words.txt --keep_isymbols=false --keep_osymbols=false | fstrmepsilon > data/$train_lang/G.fst$kaldi_root_dir/src/fstbin/fstisstochastic data/$train_lang/G.fstecho "End of Script"save the above code as langmodel.sh and run sh langmodel.sh on your terminal. you will see the below output.

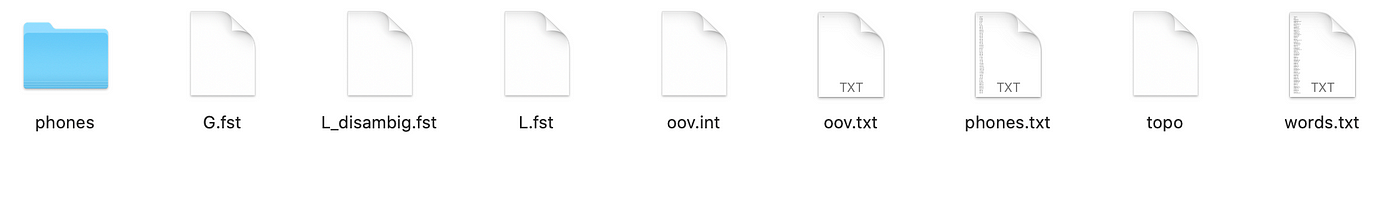

When you see the success message, enjoy! You have created your first language model. To check it, go to your data folder: you should see two directories, local and langmodel. Open langmodel and you will find the folder structure below.

G.fst is the word-level grammar (the language model) represented as a finite state transducer.

L.fst is the pronunciation lexicon represented as a finite state transducer.

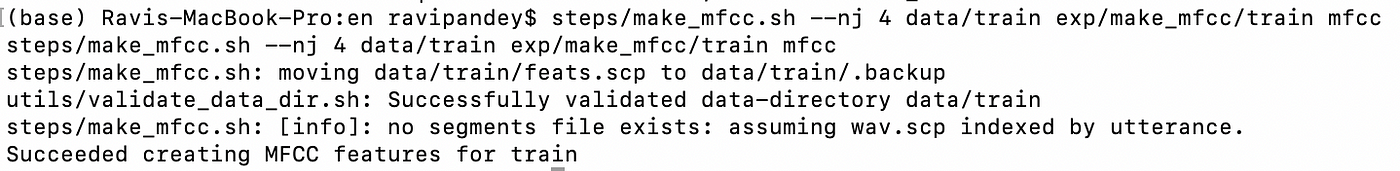

Feature Extraction

In this step we extract MFCC (mel-frequency cepstral coefficient) features for each utterance. Open your terminal, go to your recipe directory, and run the command below.

```bash
steps/make_mfcc.sh --nj 4 data/train exp/make_mfcc/train mfcc
```

make_mfcc.sh computes the MFCC features. Its option and arguments are:

--nj: number of parallel jobs; set it according to the number of CPU cores you have.

data/train: the data folder for which you want to compute MFCCs.

exp/make_mfcc/train: the directory where the log files are stored.

mfcc: the directory where the extracted features are stored.

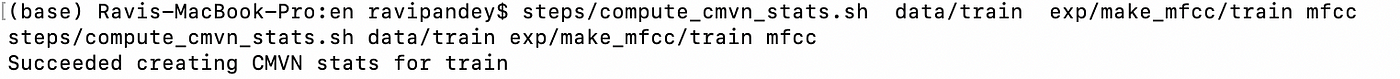
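make_mfcc.sh reads its feature options from conf/mfcc.conf unless you pass --mfcc-config, so that file must exist in your recipe folder. A minimal sketch, assuming 16 kHz recordings: the hypothetical write_mfcc_conf helper writes a two-line config using --use-energy and --sample-frequency, both options of Kaldi's compute-mfcc-feats. Set --sample-frequency to the actual sample rate of your wav files.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write conf/mfcc.conf, which steps/make_mfcc.sh reads
# by default. Values assume 16 kHz audio; adjust --sample-frequency to match.
write_mfcc_conf() {
  local conf_dir="${1:-conf}"
  mkdir -p "$conf_dir"
  cat > "$conf_dir/mfcc.conf" <<'EOF'
--use-energy=false
--sample-frequency=16000
EOF
}
```

Call it once from your recipe directory (`write_mfcc_conf`) before running make_mfcc.sh.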

To compute the cepstral mean and variance normalization (CMVN) statistics per speaker, run the command below.

```bash
steps/compute_cmvn_stats.sh data/train exp/make_mfcc/train mfcc
```

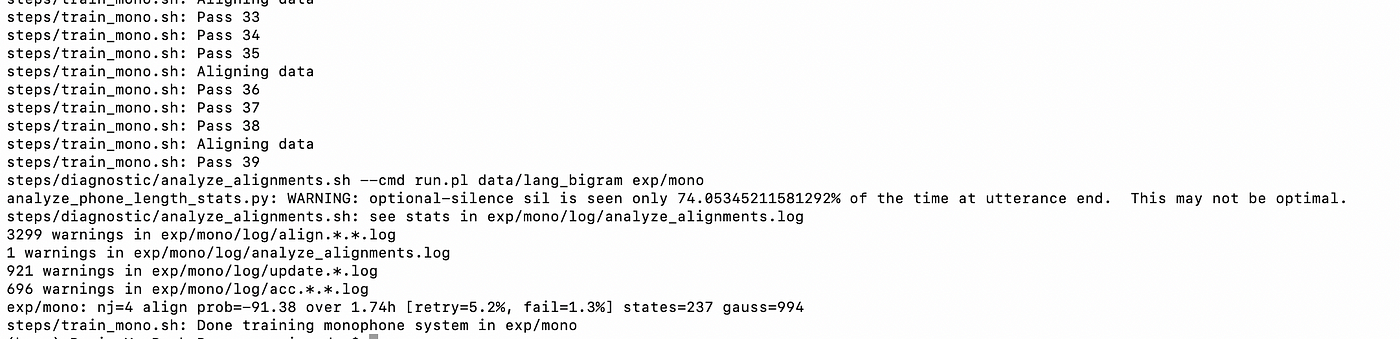
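Per speaker, CMVN shifts each feature dimension to zero mean (and, with variance normalization, to unit variance), which reduces differences between speakers and recording channels. compute_cmvn_stats.sh only stores the statistics (referenced from data/train/cmvn.scp); they are applied to the features later, during training and decoding. The awk sketch below shows the arithmetic for a single feature dimension on toy numbers. cmvn_one_dim is a hypothetical helper and does not reflect how Kaldi stores or applies the statistics.

```bash
#!/usr/bin/env bash
# Illustration only: per-speaker mean and variance normalisation of ONE
# feature dimension. Input lines: <speaker-id> <value>.
# Output lines: <speaker-id> <(value - mean_spk) / std_spk>.
cmvn_one_dim() {
  LC_ALL=C awk '
    NR == FNR { n[$1]++; s[$1] += $2; ss[$1] += $2 * $2; next }  # pass 1: stats
    {                                                            # pass 2: apply
      mean = s[$1] / n[$1]
      var = ss[$1] / n[$1] - mean * mean
      sd = (var > 0) ? sqrt(var) : 1     # guard against zero variance
      printf "%s %.3f\n", $1, ($2 - mean) / sd
    }' "$1" "$1"
}
```

For the input lines `a 1`, `a 3`, and `b 5`, speaker a has mean 2 and standard deviation 1, so its values become -1.000 and 1.000, while b's single value becomes 0.000.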

Acoustic Model Preparation

In this step we train a monophone HMM system using the command below.

```bash
steps/train_mono.sh --nj 4 data/train data/langmodel exp/mono
```
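If train_mono.sh (or make_mfcc.sh) stops with an error about the data directory, the usual cause is a mismatch between wav.scp, text, utt2spk, and spk2utt. Kaldi ships utils/validate_data_dir.sh and utils/fix_data_dir.sh for this. The hypothetical check_data_dir function below is only a small subset of those checks, to show what they look for: every file present, sorted in the C locale, and listing the same utterance IDs.

```bash
#!/usr/bin/env bash
# Hypothetical minimal consistency check for a Kaldi data directory
# (a small subset of what utils/validate_data_dir.sh verifies).
check_data_dir() {
  local d="$1" f ids
  for f in wav.scp text utt2spk spk2utt; do
    [ -s "$d/$f" ] || { echo "missing or empty: $d/$f" >&2; return 1; }
    # Kaldi expects C-locale sort order.
    LC_ALL=C sort -c "$d/$f" 2>/dev/null || { echo "not sorted: $d/$f" >&2; return 1; }
  done
  # wav.scp and text must list exactly the utterance IDs in utt2spk.
  ids=$(cut -d' ' -f1 "$d/utt2spk")
  for f in wav.scp text; do
    [ "$(cut -d' ' -f1 "$d/$f")" = "$ids" ] || { echo "utterance IDs differ: $d/$f" >&2; return 1; }
  done
  echo "ok: $d"
}
```

Run `check_data_dir data/train` and `check_data_dir data/test`; when one fails, utils/fix_data_dir.sh can usually repair sorting and mismatched entries.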

Run the command below to combine the acoustic model, the lexicon, and the language model into the final decoding graph (HCLG.fst).

```bash
utils/mkgraph.sh --mono data/langmodel exp/mono exp/mono/graph
```

Yeah! You have finally trained a model for your own ASR system.

Decoding

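To check how your ASR system performs, decode unseen test data. First compute the MFCC features and CMVN statistics for data/test with the same two commands you used for data/train, replacing train with test. Then run:

```bash
steps/decode.sh --nj 4 exp/mono/graph data/test exp/mono/decode
```

To see the decoded results, use the command below:

```bash
utils/int2sym.pl -f 2- data/langmodel/words.txt exp/mono/decode/scoring/3.tra
```

Now we have successfully built an ASR system for a custom language. YAY!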
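Beyond reading the transcripts, the usual number to track is the word error rate (WER). steps/decode.sh calls local/score.sh, which typically writes wer_* files into exp/mono/decode; `grep WER exp/mono/decode/wer_* | utils/best_wer.sh` then prints the best result. The sketch below only shows the arithmetic behind WER for a single sentence: a word-level edit distance, WER = 100 × (substitutions + deletions + insertions) / number of reference words. wer is a hypothetical helper, not Kaldi's compute-wer.

```bash
#!/usr/bin/env bash
# Illustration only: WER (%) of one hypothesis against one reference,
# using a word-level Levenshtein (edit) distance.
wer() {
  LC_ALL=C awk -v ref="$1" -v hyp="$2" 'BEGIN {
    n = split(ref, r, " "); m = split(hyp, h, " ")
    if (n == 0) { print "empty reference" > "/dev/stderr"; exit 1 }
    for (i = 0; i <= n; i++) d[i, 0] = i          # i deletions
    for (j = 0; j <= m; j++) d[0, j] = j          # j insertions
    for (i = 1; i <= n; i++)
      for (j = 1; j <= m; j++) {
        best = d[i - 1, j - 1] + (r[i] == h[j] ? 0 : 1)          # match/substitution
        if (d[i - 1, j] + 1 < best) best = d[i - 1, j] + 1       # deletion
        if (d[i, j - 1] + 1 < best) best = d[i, j - 1] + 1       # insertion
        d[i, j] = best
      }
    printf "%.2f\n", 100 * d[n, m] / n
  }'
}
```

For example, `wer "the cat sat" "the bat sat"` prints 33.33: one substitution out of three reference words.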

If you liked the post, hit clap! Thanks!